FairDetect: Advanced AI Bias Detection

Project Details

- Category: AI & Machine Learning

- Project Date: Aug 2022

- GitHub: FairDetect GitHub

- Google AI: What-If Tool

Description

FairDetect identifies and reduces bias in AI models. It uses both synthetic and real-world datasets to check whether a model's decisions are fair across groups, in support of ethical AI practice.

Key Features

- Bias Detection in Synthetic Data: Uses controlled datasets to understand and mitigate biases.

- Real-World Application: Analyzes insurance data to uncover and address biases in practical AI implementations.

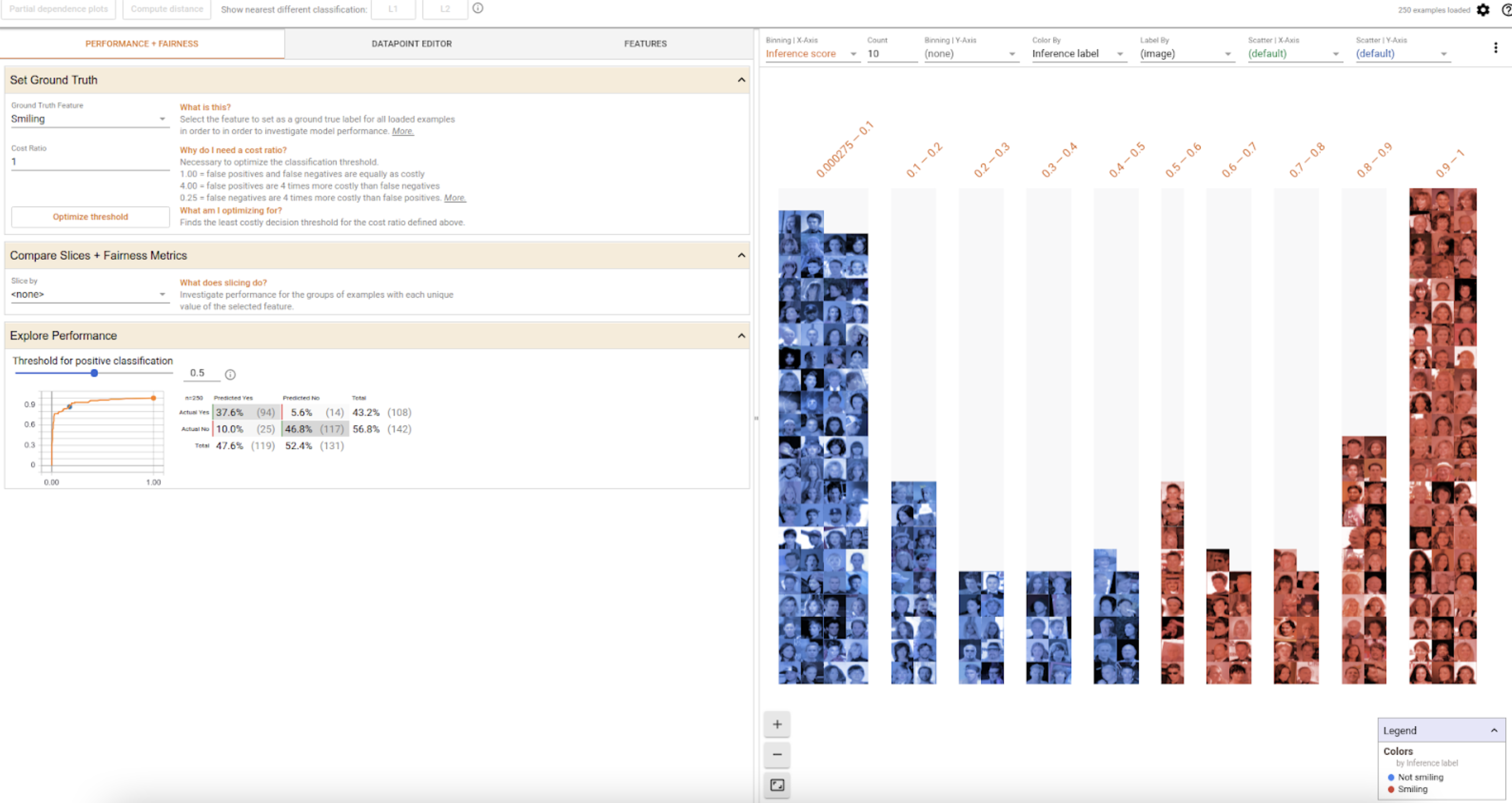

- Advanced Visualization: Presents bias detection results through clear and comprehensive visualizations.

- FairML and Google Fairness Indicators (What-If Tool): Uses these tools to assess model fairness.
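The idea behind bias detection on synthetic data can be sketched in a few lines. The example below is a minimal illustration, not FairDetect's actual code: the function names, the group labels `A` and `B`, the base approval rate of 0.6, and the injected bias of 0.2 are all assumptions chosen for the sketch. It generates decisions with a known gap between two groups, then measures that gap with two common fairness metrics, the demographic parity difference and the disparate impact ratio.

```python
import random


def make_synthetic_data(n=10_000, bias=0.2, seed=0):
    """Generate (group, approved) pairs with a known, injected bias.

    Hypothetical setup: group "A" is approved at a rate of 0.6,
    group "B" at a rate of 0.6 - bias.
    """
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        group = rng.choice(["A", "B"])
        rate = 0.6 if group == "A" else 0.6 - bias
        rows.append((group, rng.random() < rate))
    return rows


def selection_rates(rows):
    """Return the fraction of positive decisions for each group."""
    totals, positives = {}, {}
    for group, approved in rows:
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + int(approved)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(rates):
    """Gap between the highest and lowest group selection rates (0 = parity)."""
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1 = parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group receives positive decisions, so rates are equal
    return min(rates.values()) / highest


rows = make_synthetic_data()
rates = selection_rates(rows)
print("selection rates:", rates)
print("demographic parity difference:", demographic_parity_difference(rates))
print("disparate impact ratio:", disparate_impact_ratio(rates))
```

Because the bias is injected deliberately, the measured difference should land close to the chosen value of 0.2, which makes it easy to check that a metric behaves as expected before applying it to real data. A disparate impact ratio below 0.8 is a commonly used warning threshold (the "four-fifths rule"), though the appropriate threshold depends on the application.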

Benefits

FairDetect helps make AI models more reliable and fair by providing tools for bias detection and mitigation. The aim is more equitable AI-driven decisions, which in turn supports trust in AI technologies and ethical practice.